Is psychology suffering from a replication crisis? What does "failure to replicate" really mean?

The Replication Crisis in Psychology

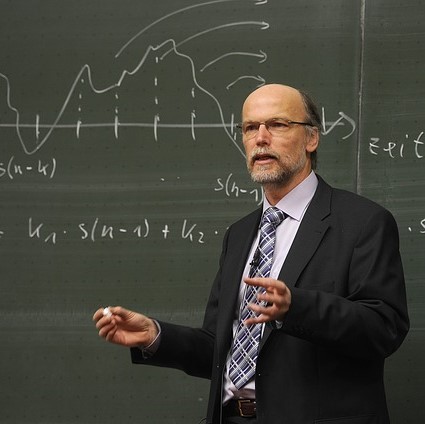

By Edward Diener and Robert Biswas-Diener

University of Utah, University of Virginia, Portland State University

In science, replication is the process of repeating research to determine the extent to which findings generalize across time and across situations. Recently, the science of psychology has come under criticism because a number of research findings do not replicate. In this module we discuss reasons for non-replication, the impact this phenomenon has on the field, and suggest solutions to the problem.

Learning Objectives

- Define "replication"

- Explain the difference between exact and conceptual replication

- List 4 explanations for non-replication

- Name 3 potential solutions to the replication crisis

The Disturbing Problem

If you were driving down the road and you saw a pirate standing at an intersection, you might not believe your eyes. But if you continued driving and saw a second, and then a third, you might become more confident in your observations. The more pirates you saw, the less likely the first sighting would be a false positive (you were driving fast and the person was just wearing an unusual hat and billowy shirt) and the more likely it would be the result of a logical reason (there is a pirate-themed conference in town). This somewhat absurd example is a real-life illustration of replication: the repeated finding of the same results.

The replication of findings is one of the defining hallmarks of science. Scientists must be able to replicate the results of studies or their findings do not become part of scientific knowledge. Replication protects against false positives (seeing a result that is not really there) and also increases confidence that the result actually exists. If you collect satisfaction data among homeless people living in Kolkata, India, for example, it might seem strange that they would report fairly high satisfaction with their food (which is exactly what we found in Biswas-Diener & Diener, 2001). If you find the exact same result, but at a different time, and with a different sample of homeless people living in Kolkata, however, you can feel more confident that this result is true (as we did in Biswas-Diener & Diener, 2006).

In modern times, the science of psychology is facing a crisis. It turns out that many studies in psychology, including many highly cited studies, do not replicate. In an era where news is instantaneous, the failure to replicate research raises important questions about the scientific process in general and psychology specifically. People have the right to know if they can trust research evidence. For our part, psychologists also have a vested interest in ensuring that our methods and findings are as trustworthy as possible.

Psychology is not alone in coming up short on replication. There have been notable failures to replicate findings in other scientific fields as well. For instance, in 1989 scientists reported that they had produced "cold fusion," achieving nuclear fusion at room temperatures. This could have been an enormous breakthrough in the advancement of clean energy. However, other scientists were unable to replicate the findings. Thus, the potentially important results did not become part of the scientific canon, and a new energy source did not materialize. In medical science as well, a number of findings have been found not to replicate, which is of vital concern to all of society. The non-reproducibility of medical findings suggests that some treatments for disease could be ineffective. One example of non-replication has emerged in the study of genetics and diseases: when replications were attempted to determine whether certain gene-disease findings held up, only about 4% of the findings consistently did so.

The non-reproducibility of findings is troubling because it suggests the possibility that the original research was done sloppily. Even worse is the suspicion that the research may have been falsified. In science, faking results is the biggest of sins, the unforgivable sin, and for this reason the field of psychology has been thrown into an uproar. However, as we will discuss, there are a number of explanations for non-replication, and not all are bad.

What is Replication?

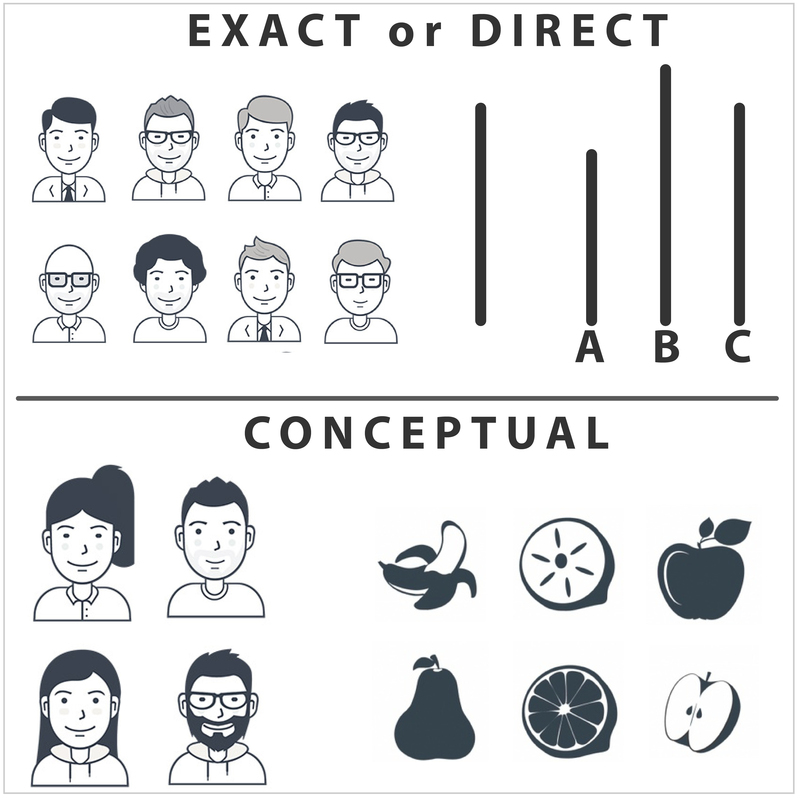

There are different types of replication. First, there is a type called "exact replication" (also called "direct replication"). In this form, a scientist attempts to exactly recreate the scientific methods and conditions used in an earlier study to determine whether the results come out the same. If, for example, you wanted to exactly replicate Asch's (1956) classic findings on conformity, you would follow the original methodology: you would use only male participants, you would use groups of 8, and you would present the same stimuli (lines of differing lengths) in the same order. The second type of replication is called "conceptual replication." Here, instead of reproducing the methods of the earlier study as closely as possible, a scientist tries to confirm the previous findings using a different set of specific methods that test the same idea. The same hypothesis is tested, but using a different set of methods and measures. A conceptual replication of Asch's research might involve both male and female confederates purposefully misidentifying types of fruit to investigate conformity, rather than only males misidentifying line lengths.

Both exact and conceptual replications are important because they each tell us something new. Exact replications tell us whether the original findings are true, at least under the exact conditions tested. Conceptual replications help confirm whether the theoretical idea behind the findings is true, and under what conditions these findings will occur. In other words, conceptual replication offers insights into how generalizable the findings are.

Enormity of the Current Crisis

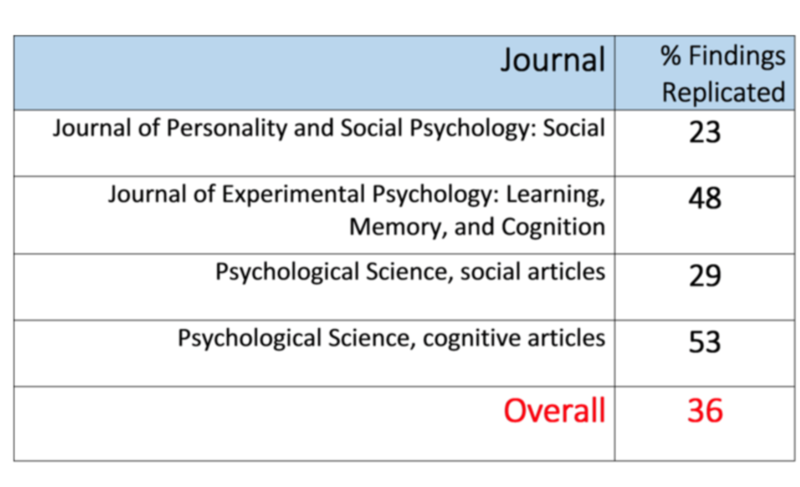

Recently, there has been growing concern as psychological research fails to replicate. To give you an idea of the extent of non-replicability of psychology findings, below are data reported in 2015 by the Open Science Collaboration project, led by University of Virginia psychologist Brian Nosek (Open Science Collaboration, 2015). Because these findings were reported in the prestigious journal Science, they received widespread attention from the media. Here are the percentages of research that replicated, selected from several highly prestigious journals:

Clearly, there is a very large problem when only about one-third of the psychological studies in premier journals replicate! It appears that this problem is particularly pronounced for social psychology, but even the 53% replication level of cognitive psychology is cause for concern.

The situation in psychology has grown so worrisome that the Nobel Prize-winning psychologist Daniel Kahneman called on social psychologists to clean up their act (Kahneman, 2012). The Nobel laureate spoke bluntly of doubts about the integrity of psychology research, calling the current situation in the field a "mess." His missive was pointed primarily at researchers who study social "priming," but in light of the non-replication results that have since come out, it might be more aptly directed at the behavioral sciences in general.

Examples of Non-replications in Psychology

A large number of scientists have attempted to replicate studies on what might be called "metaphorical priming," and more often than not these replications have failed. Priming is the process by which a recent reference (often a subtle, subconscious cue) can increase the accessibility of a trait. For example, if your instructor says, "Please put aside your books, take out a clean sheet of paper, and write your name at the top," you might find your pulse quickening. Over time, you have learned that this cue means you are about to be given a pop quiz. This phrase primes all the features associated with pop quizzes: they are anxiety-provoking, they are tricky, your performance matters.

One example of a priming study that, at least in some cases, does not replicate is the priming of the idea of intelligence. In theory, it might be possible to prime people to actually become more intelligent (or perform better on tests, at least). For instance, in one study, priming students with the idea of a stereotypical professor versus soccer hooligans led participants in the "professor" condition to earn higher scores on a trivia game (Dijksterhuis & van Knippenberg, 1998). Unfortunately, in several follow-up instances this finding has not replicated (Shanks et al., 2013). This is unfortunate for all of us because it would be a very easy way to raise our test scores and general intelligence. If only it were true.

Another example of a finding that seems not to replicate consistently is the use of spatial distance cues to prime people's feelings of emotional closeness to their families (Williams & Bargh, 2008). In this type of study, participants are asked to plot points on graph paper, either close together or far apart. The participants are then asked to rate how close they are to their family members. Although the original researchers found that people who plotted close-together points on graph paper reported being closer to their relatives, studies reported on PsychFileDrawer (an internet repository of replication attempts) suggest that the findings frequently do not replicate. Again, this is unfortunate because it would be a handy way to help people feel closer to their families.

As one can see from the examples, some of the studies that fail to replicate report extremely interesting findings, even counterintuitive findings that appear to offer new insights into the human mind. Critics claim that psychologists have become too enamored with such newsworthy, surprising "discoveries" that receive a lot of media attention. This raises the question of timing: might the current crisis of non-replication be related to the modern, media-hungry context in which psychological research (indeed, all research) is conducted? Put another way: is the non-replication crisis new?

Nobody has tried to systematically replicate studies from the past, so we do not know if published studies are becoming less replicable over time. In 1990, however, Amir and Sharon were able to successfully replicate most of the main effects of six studies from another culture, though they did fail to replicate many of the interactions. This particular shortcoming in their overall replication may suggest that published studies are becoming less replicable over time, but we cannot be certain. What we can be sure of is that there is a significant problem with replication in psychology, and it's a trend the field needs to correct. Without replicable findings, nobody will be able to believe in scientific psychology.

Reasons for Non-replication

When findings do not replicate, the original scientists sometimes become indignant and defensive, offering reasons or excuses for non-replication of their findings and, at times, attacking those attempting the replication. They sometimes claim that the scientists attempting the replication are unskilled or unsophisticated, or do not have sufficient experience to replicate the findings. This, of course, might be true, and it is one possible reason for non-replication.

One reason for defensive responses is the unspoken implication that the original results might have been falsified. Faked results are only one reason studies may not replicate, but it is the most disturbing reason. We hope faking is rare, but in the past decade a number of shocking cases have turned up. Perhaps the most well-known come from social psychology. Diederik Stapel, a renowned social psychologist in the Netherlands, admitted to faking the results of a number of studies. Marc Hauser, a popular professor at Harvard, apparently faked results on morality and cognition. Karen Ruggiero at the University of Texas was also found to have falsified a number of her results (proving that bad behavior doesn't have a gender bias). Each of these psychologists (and there are quite a few more examples) was believed to have faked data. Subsequently, they all were disgraced and lost their jobs.

Another reason for non-replication is that, in studies with small sample sizes, statistically significant results may often be the result of chance. For example, if you ask five people if they believe that aliens from other planets visit Earth and regularly abduct humans, you may get three people who agree with this notion, just by chance. Their answers may, in fact, not be at all representative of the larger population. On the other hand, if you survey one thousand people, there is a higher probability that their belief in alien abductions reflects the actual attitudes of society. Now consider this scenario in the context of replication: if you try to replicate the first study (the one in which you interviewed only five people), there is only a small chance that you will randomly draw five new people with exactly the same (or similar) attitudes. It's far more likely that you will be able to replicate the findings using another large sample, because it is simply more likely that the findings are accurate.
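The sampling argument above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the 20% "true" rate of belief in alien abductions is a hypothetical number chosen for illustration, not a figure from any study, and the function name `simulate_surveys` is likewise made up for this example. It runs many surveys of 5 people and many surveys of 1,000 people and compares how much the results vary and how often a survey shows a majority of believers purely by chance.

```python
import random
import statistics


def simulate_surveys(true_rate, sample_size, n_surveys, seed=0):
    """Return the observed proportion of 'believers' in each simulated survey."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    proportions = []
    for _ in range(n_surveys):
        believers = sum(rng.random() < true_rate for _ in range(sample_size))
        proportions.append(believers / sample_size)
    return proportions


# Hypothetical value chosen for illustration only; not taken from any study.
TRUE_RATE = 0.20

small = simulate_surveys(TRUE_RATE, sample_size=5, n_surveys=1000)
large = simulate_surveys(TRUE_RATE, sample_size=1000, n_surveys=1000)

# How much do the results bounce around from one survey to the next?
spread_small = statistics.pstdev(small)
spread_large = statistics.pstdev(large)

# How often does a survey report a majority of believers purely by chance?
majority_small = sum(p > 0.5 for p in small) / len(small)
majority_large = sum(p > 0.5 for p in large) / len(large)

print(f"n=5:    spread={spread_small:.3f}, majority of believers in {majority_small:.1%} of surveys")
print(f"n=1000: spread={spread_large:.3f}, majority of believers in {majority_large:.1%} of surveys")
```

Under these assumptions, the five-person surveys vary far more from one run to the next than the thousand-person surveys do, which is why a striking result from a tiny sample is hard to reproduce.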

Another reason for non-replication is that, while the findings in an original study may be true, they may only be true for some people in some circumstances and not necessarily universal or enduring. Imagine that a survey in the 1950s found a strong majority of respondents to have trust in government officials. Now imagine the same survey administered today, with vastly different results. This example of non-replication does not invalidate the original results. Rather, it suggests that attitudes have shifted over time.

A final reason for non-replication relates to the quality of the replication rather than the quality of the original study. Non-replication might be the product of scientist error, with the newer investigation not following the original procedures closely enough. Similarly, the attempted replication study might itself have too small a sample size or insufficient statistical power to find significant results.
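To make the point about statistical power concrete, here is a rough simulation sketch. Everything specific in it is an assumption made for illustration: the true effect of 0.4 standard deviations is hypothetical, the function name `replication_success_rate` is invented for this example, and the significance test is a simplified two-sided z-test that treats the standard deviation as known (equal to 1). It estimates how often a replication attempt reaches significance when the effect being studied is real.

```python
import random
from statistics import NormalDist


def replication_success_rate(effect_size, n_per_group, n_attempts=1000, alpha=0.05, seed=1):
    """Share of simulated replications that reach significance for a real effect."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    standard_error = (2 / n_per_group) ** 0.5     # assumes a known SD of 1 in each group
    successes = 0
    for _ in range(n_attempts):
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        treated = [rng.gauss(effect_size, 1.0) for _ in range(n_per_group)]
        difference = sum(treated) / n_per_group - sum(control) / n_per_group
        if abs(difference / standard_error) > z_crit:
            successes += 1
    return successes / n_attempts


# Hypothetical true effect, in standard-deviation units (illustration only).
EFFECT_SIZE = 0.4

power_small = replication_success_rate(EFFECT_SIZE, n_per_group=20)
power_large = replication_success_rate(EFFECT_SIZE, n_per_group=200)

print(f"20 participants per group:  significant in {power_small:.0%} of attempts")
print(f"200 participants per group: significant in {power_large:.0%} of attempts")
```

In this sketch the effect is real in every simulated attempt, yet the small replications miss it most of the time. A single failed replication with a small sample is therefore weak evidence that the original finding was false.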

In Defence of Replication Attempts

Failures in replication are not all bad and, in fact, some non-replication should be expected in science. Original studies are conducted when an answer to a question is uncertain. That is to say, scientists are venturing into new territory. In such cases we should expect some answers to be uncovered that will not pan out in the long run. Furthermore, we hope that scientists take on challenging new topics that come with some amount of risk. After all, if scientists were only to publish safe results that were easy to replicate, we might have very boring studies that do not advance our knowledge very quickly. But, with such risks, some non-replication of results is to be expected.

A recent example of risk-taking can be seen in the research of social psychologist Daryl Bem. In 2011, Bem published an article claiming he had found in a number of studies that future events could influence the past. His proposition turns the nature of time, which is assumed by virtually everyone except science fiction writers to run in one direction, on its head. Needless to say, attacks on Bem's article came fast and furious, including attacks on his statistics and methodology (Ritchie, Wiseman & French, 2012). There were attempts at replication and most of them failed, but not all. A year after Bem's article came out, the prestigious journal where it was published, Journal of Personality and Social Psychology, published another paper in which scientists failed to replicate Bem's findings in a number of studies very similar to the originals (Galak, LeBoeuf, Nelson & Simmons, 2012).

Some people viewed the publication of Bem's (2011) original study as a failure in the system of science. They argued that the paper should not have been published. But the editor and reviewers of the article had moved forward with publication because, although they might have thought the findings provocative and unlikely, they did not see obvious flaws in the methodology. We see the publication of the Bem paper, and the ensuing debate, as a strength of science. We are willing to consider unusual ideas if there is evidence to support them: we are open-minded. At the same time, we are critical and believe in replication. Scientists should be willing to consider unusual or risky hypotheses but ultimately allow good evidence to have the final say, not people's opinions.

Solutions to the Problem

Dissemination of Replication Attempts

- Psychfiledrawer.org: Archives attempted replications of specific studies and whether replication was achieved.

- Center for Open Science: Psychologist Brian Nosek, a champion of replication in psychology, has created the Open Science Framework, where replications can be reported.

- Association for Psychological Science: Has registered replications of studies, with the overall results published in Perspectives on Psychological Science.

- PLOS ONE (Public Library of Science): Publishes a broad range of articles, including failed replications, and there are occasional summaries of replication attempts in specific areas.

- The Replication Index: Created in 2014 by Ulrich Schimmack, the so-called "R Index" is a statistical tool for estimating the replicability of studies, of journals, and even of specific researchers. Schimmack describes it as a "doping test".

The fact that replications, including failed replication attempts, now have outlets where they can be communicated to other researchers is a very encouraging development, and should strengthen the science considerably. One problem for many decades has been the near-impossibility of publishing replication attempts, regardless of whether they've been positive or negative.

More Systematic Programs of Scientific Research

The reward structure in academia has served to discourage replication. Many psychologists, especially those who work full time at universities, are often rewarded at work (with promotions, pay raises, tenure, and prestige) through their research. Replication of one's own earlier work, or the work of others, is typically discouraged because it does not represent original thinking. Instead, academics are rewarded for high numbers of publications, and flashy studies are often given prominence in media reports of published studies.

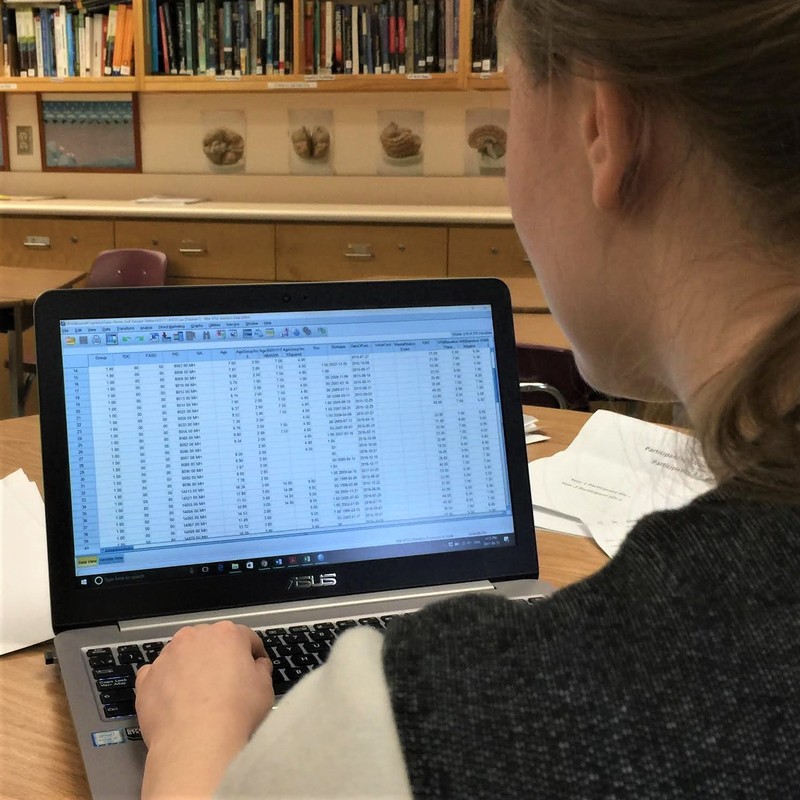

Psychological scientists need to carefully pursue programmatic research. Findings from a single study are rarely adequate, and should be followed up by additional studies using varying methodologies. Thinking about research this way, as if it were a program rather than a single study, can help. We would recommend that laboratories conduct careful sets of interlocking studies, where important findings are followed up using various methods. It is not sufficient to find some surprising outcome, report it, and then move on. When findings are important enough to be published, they are often important enough to prompt further, more conclusive research. In this way scientists will discover whether their findings are replicable, and how broadly generalizable they are. If the findings do not always replicate, but do sometimes, we will learn the conditions in which the pattern does or doesn't hold. This is an important part of science: to discover how generalizable the findings are.

When researchers criticize others for being unable to replicate the original findings, saying that the conditions in the follow-up study were changed, this is important to pay attention to as well. Not all criticism is knee-jerk defensiveness or resentment. The replication crisis has stirred heated emotions among research psychologists and the public, but it is time for us to calm down and return to a more scientific attitude and system of programmatic research.

Textbooks and Journals

Some psychologists blame the trend toward non-replication on specific journal policies, such as the policy of Psychological Science to publish short single studies. When single studies are published we do not know whether even the authors themselves can replicate their findings. The journal Psychological Science has come under perhaps the harshest criticism. Others blame the rash of nonreplicable studies on a tendency of some fields to favor surprising and counterintuitive findings that grab the public interest. The irony here is that such counterintuitive findings are in fact less likely to be true precisely because they are so strange, and so they should perhaps warrant more scrutiny and further analysis.

The criticism of journals extends to textbooks as well. In our opinion, psychology textbooks should stress true science, based on findings that have been demonstrated to be replicable. There are a number of inaccuracies that persist across common psychology textbooks, including small mistakes in common coverage of the most famous studies, such as the Stanford Prison Experiment (Griggs & Whitehead, 2014) and the Milgram studies (Griggs & Whitehead, 2015). To some extent, the inclusion of non-replicated studies in textbooks is the product of market forces. Textbook publishers are under pressure to release new editions of their books, often far more frequently than advances in psychological science truly justify. As a result, there is pressure to include "sexier" topics such as controversial studies.

Ultimately, people also need to learn to be intelligent consumers of science. Instead of getting overly excited by findings from a single study, it's wise to wait for replications. When a corpus of studies is built on a phenomenon, we can begin to trust the findings. Journalists must be educated about this as well, and learn not to readily broadcast and promote findings from single flashy studies. If the results of a study seem too good to be true, perhaps they are. Everyone needs to take a more skeptical view of scientific findings, until they have been replicated.

Outside Resources

- Article: New Yorker article on the "replication crisis"

- http://www.newyorker.com/tech/elements/the-crisis-in-social-psychology-that-isnt

- Web: Collaborative Replications and Education Project - This is a replication project where students are encouraged to conduct replications as part of their courses.

- https://osf.io/wfc6u/

- Web: Commentary on what makes for a convincing replication.

- http://papers.ssrn.com/sol3/papers.cfm?abstract_id=2283856

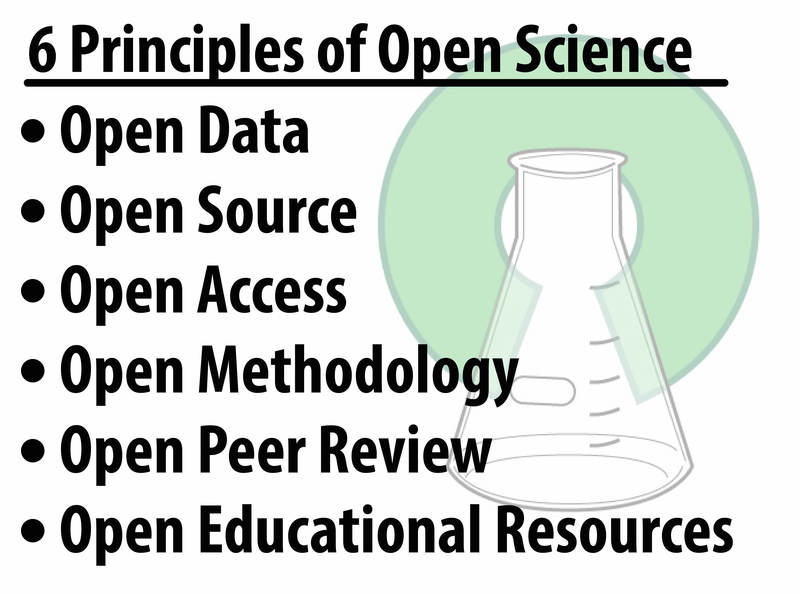

- Web: Open Science Framework - The Open Science Framework is an open source software project that facilitates open collaboration in science research.

- https://osf.io/

- Web: Psych File Drawer - A website created to address "the file drawer problem". PsychFileDrawer.org allows users to upload results of serious replication attempts in all research areas of psychology.

- http://psychfiledrawer.org/

Discussion Questions

- Why do scientists see replication by other laboratories as being so crucial to advances in science?

- Do the failures of replication shake your faith in what you have learned about psychology? Why or why not?

- Can you think of any psychological findings that you think might not replicate?

- What findings are so important that you think they should be replicated?

- Why do you think quite a few studies do not replicate?

- How often do you think faking results occurs? Why? How might we prevent that?

Vocabulary

- Conceptual Replication

- A scientific attempt to copy the scientific hypothesis used in an earlier study in an effort to determine whether the results will generalize to different samples, times, or situations. The same (or similar) results are an indication that the findings are generalizable.

- Confederate

- An actor working with the researcher. Most often, this individual is used to deceive unsuspecting research participants. Also known as a "stooge."

- Exact Replication (also called Direct Replication)

- A scientific attempt to exactly copy the scientific methods used in an earlier study in an attempt to determine whether the results are consistent. The same—or similar—results are an indication that the findings are accurate.

- Falsified data (faked data)

- Data that are fabricated, or made up, by researchers intentionally trying to pass off research results that are inaccurate. This is a serious ethical breach and can even be a crime.

- Priming

- The process by which exposing people to one stimulus makes certain thoughts, feelings or behaviors more salient.

- Sample Size

- The number of participants in a study. Sample size is important because it can influence the confidence scientists have in the accuracy and generalizability of their results.

References

- Amir, Y., & Sharon, I. (1990). Replication research: A "must" for the scientific advancement of psychology. Journal of Social Behavior and Personality, Special Issue, 5, 51-69.

- Asch, S. E. (1956). Studies of independence and conformity: I. A minority of one against a unanimous majority. Psychological Monographs, 70 (9, Whole No. 416).

- Bem, D. J. (2011). Feeling the future: Experimental evidence for anomalous retroactive influences on cognition and affect. Journal of Personality and Social Psychology, 100, 407–425.

- Biswas-Diener, R., & Diener, E. (2006). Subjective well-being of the homeless, and lessons for happiness. Social Indicators Research, 76, 185-205.

- Biswas-Diener, R., & Diener, E. (2001). Making the best of a bad situation: Satisfaction in the slums of Calcutta. Social Indicators Research, 55, 329-352.

- Dijksterhuis, A., & van Knippenberg, A. (1998). The relation between perception and behavior or how to win a game of Trivial Pursuit. Journal of Personality and Social Psychology, 74, 865–877.

- Galak, J., LeBoeuf, R. A., Nelson, L. D., & Simmons, J. P. (2012, August 27). Correcting the past: Failures to replicate psi. Journal of Personality and Social Psychology.

- Griggs, R. A., & Whitehead, G. I. (2015). Coverage of Milgram's obedience experiments in social psychology textbooks: Where have all the criticisms gone? Teaching of Psychology, 42, 315-322.

- Griggs, R. A., & Whitehead, G. I. (2014). Coverage of the Stanford Prison Experiment in introductory social psychology textbooks. Teaching of Psychology, 41, 318-324.

- Kahneman, D. (2012). A proposal to deal with questions about priming effects. An open letter to the scientific community: http://www.nature.com/polopoly_fs/7.6716.1349271308!/suppinfoFile/Kahneman%20Letter.pdf

- Open Science Collaboration (2015). Estimating the reproducibility of psychological science. Science, 349.

- Ritchie, S. J., Wiseman, R., & French, C. C. (2012). Failing the future: Three unsuccessful attempts to replicate Bem's 'retroactive facilitation of recall' effect. PLOS ONE. DOI: 10.1371/journal.pone.0033423

- Shanks, D. R., Newell, B., Lee, E. H., Balakrishnan, D., Ekelund, L., Cenac, Z., Kavvadia, F., & Moore, C. (2013). Priming intelligent behavior: An elusive phenomenon. PLOS ONE. DOI: 10.1371/journal.pone.0056515

- Williams, L. E., & Bargh, J. A. (2008). Keeping one's distance: The influence of spatial distance cues on affect and evaluation. Psychological Science, 19, 302-308.

Creative Commons License

The Replication Crisis in Psychology by Edward Diener and Robert Biswas-Diener is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. Permissions beyond the scope of this license may be available in our Licensing Agreement.

How to cite this Noba module using APA Style

Diener, E. & Biswas-Diener, R. (2022). The replication crisis in psychology. In R. Biswas-Diener & E. Diener (Eds), Noba textbook series: Psychology. Champaign, IL: DEF publishers. Retrieved from http://noba.to/q4cvydeh

Source: https://nobaproject.com/modules/the-replication-crisis-in-psychology
